Naïve Yesterday, Lustrous Tomorrow

Twenty to thirty years seems to be the period of time after which it becomes respectable to begin the art historical study of an art project, artform, event or movement. It was indeed in the year 2020 that, in Europe, artists and curators who made internet art in the 1990s and early 2000s started getting an increasing number of emails from PhD students, funded postdoctoral researchers, curators and other scholars with requests for interviews, documentation, and contextual information. Suddenly, twenty- or thirty-year-old art does not look outdated but can be re-seen, to claim the time of now as its new cycle of, now art-historical, existence.

Only a couple of years ago I struggled to explain to my students why exploring a project made in 1996 was meaningful. As we near the thirtieth jubilee of the World Wide Web, the rules of the game change. Projects that looked naïve yesterday appear fresh, almost lustrous, like unexpectedly discovered early designs and blueprints of things ubiquitous today, or as traces of other paths that might have been taken. They appear in a new light, one of changed screens indeed, but also one of new ideation.

What is this new light? Is it a question of inevitable historical cycles and an attempt to see abstract principles of the spiral of history traversing our own lifetime? Is it a question of "aging well"? Is it a question of being stuck with the same problems that bear new problems that bear new problems until the cascade overflows, perhaps in the form of street protests? And/or is it that we find ourselves in the moment of another reconsideration of human-technical relationships (with advances in artificial intelligence, new language models, pervasive data practices) that lets us see the analogies to previous such moments, some of which are, coincidentally, twenty or so years old?

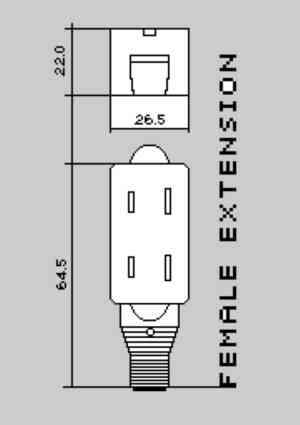

And what does it mean, anyway, to age well? Browsing recently, I came upon some sociology and critical theory research on consumerism, popular in the late 1990s and early 2000s, mostly validating consumption as a practice of individual identity-building. This work has not aged well at all. In the times of climate damage, such postmodernist explorations seem absurd. On the other hand, work such as Cornelia Sollfrank’s Female Extension (1997), which automatically generated female artists and their works, is an early precursor to the automation of creation, “style transfer”, interpolation and other augmentation techniques performed by machine learning (ML) models today. The use of AI agents in art and music, as well as text, is boosted by the latest ML models, especially auto-regressive language models such as GPT-3 (generative pre-trained transformer 3), an ecological disaster due to its massive energy usage, whose PR makes it hard to judge whether it works really well or is merely really well promoted. (After efficient initial hype about its "dangerous" power, the company that developed GPT-3 received a $1bn investment from Microsoft in return for an exclusive license.) In any case, the art world is buzzing around the new keywords.

Today, it would be no problem to build upon the implementation of Cornelia Sollfrank’s project by using new ML models to create identities for the invented artists, generate their unique faces, write their life stories and develop individual styles, alongside a plethora of original art works. A project that points in this direction is an intervention from 2021, a collaboration between Joasia Krysa, Ubermorgen and Leonardo Impett, The Next Biennale Should Be Curated by a Machine. One of the core questions this work poses is why virtual artists generated in abundance to disrupt a model of artistic success based on gatekeeping and artificial scarcity should pretend to take on a human form. As posthuman arguments around ecology are strengthened, they should be joined by the animal artist, plant artist, and the non-human and non-living artist. It is the struggle with the construction of the “artist” that Sollfrank’s project worked with: a figure gendered as male and racialised as White, whose importance is derived from the notion of the Subject. The legacy of the concept of the Subject is hard to shake, since it is foundational to the structures of our society: the economic (based around autonomous individuals with their own bank accounts and regimes of private property that produce subjects), medical (focused on contained bodies), legal (representing juridically formed subjects), political (reliant on voting subjects) and many others. Consequently, many others are framed as unimportant non-subjects. Injecting those into the art and other scenes, in forms that exist, are invented or predicted, is something that is currently being moved out of the hands of the artist and into the realm of artificial intelligence, where new problems of gatekeeping arise.

At the time Female Extension was made, the question of the subject was approached through a feminist, anti-colonial and ecological critique, and it was, as part of the backwash of the postmodern movement, also a question of the author (a category that had also been strongly reworked in historical avant-gardes). Thinking about technology, or a specific piece of software, as an author, as a collaborator, was a distinctive feature of much of the net art and software art and related phenomena of the 1990s and early 2000s. These art forms conceptualised and practiced the extension of authorship to non-human infrastructural software environments and practices. But the pushback against the idea of the human authorial figure and a lively engagement with code, software and technical infrastructures as active agents were dampened by the general capitalist logic of reward, whether for companies’ shareholders or for individual artists. Post-Internet art, for instance, was keen to return to the model of individual success, and it was indeed the collective and self-deprecating dimension of internet art that was discarded first. Suddenly, from the early 2010s, nowhere could we see the kind of gestures common in the 1990s and early 2000s, when personal invitations were turned into collective platforms, artists contributed to actions under collective art pseudonyms, and some projects remained anonymous forever. (Perhaps now, the times have changed again, since in the year 2021 all entities nominated for the Turner Prize are collectives.)

There are some differences between how the questions of authorship, the agency of technology and the nature of the artwork were posed twenty years ago and the form into which these questions have now mutated. Projects and platforms of the late 1990s and early 2000s developed new art. While striving for agency shared with technology, the focus often remained on the communal working-out of a new aesthetics. In a sense, it was a practice birthing something aesthetically brilliant. In other words, it was an empiricist, materialist endeavour.

Today, related discussions are driven by related but changed questions: AI making art, curating automatically and personalising all data. Here, attention is squarely on the deep learning models that make art, rather than the art made by the deep learning models. The question is how your data is curated and personalised and what it means politically, rather than the detail of what it is that you are served. Previously, art developed an ethics of being anti-authorial, deprivileging certain forms of subject by making art that embodied such working as aesthetic propositions. Now, not many people are interested in curated data, machine-generated text or AI art as a new aesthetic in its own right. The attention is all on the models. Certainly, AI art “outputs”, GPT-3 texts and algorithmic curation encapsulate their working processes. However, more often than not they are non-communicable, proprietary or financially and ecologically expensive to play with, very demanding in terms of computational capacity, or solely driven by damaging economic and political considerations. At some level, we are not interested in what they produce or what they do. We are interested in what they are, whether they are, indeed, extremely good and, if so, what happens to the humans. In other words, it is an idealist horizon; we are, once again, asking questions about the ideal, as both a logical projection and a model, and how it shapes society.

The current moment brings us back to the questions of the artist-author, the curator, the subject and the agency of technology in new ways and for a number of reasons. Among them are incommensurability (between the human scale of the users and the huge models, platforms and infrastructures delivering results) and non-explainability (of deep learning models, driven as much by the sector’s desire to hype its products and by commercial secrecy as by the difficulty of explanation). The scale has changed to one of art inhabiting hyper-infrastructure and selecting from its options, while the human figure has faded. On the one hand, the question of the human subject-author and technological agency continues, undergirded by our narcissistic obsession with the figure of the human, with its rich history and its wide range of practices of discrimination, and with anxiety around antihuman figures to round it all off. On the other hand, the question has morphed, from one foregrounding techno-infrastructural play, organisational aesthetics, and aesthetic brilliance, to one of non-figural entities such as deep learning models, generative forces of technological production, and machinic dynamics, which indicate that the shift to the nonhuman has already occurred (while often intensifying the problems of gender, race, disability and ecology).

We are used to the problem of media art becoming defunct. The technological age is brutal. Conceptually, however, in today’s moment of AI hotness, the projects we were involved with seem to have put pen to paper, starting to draw the lines that by now have subsumed our field of vision.